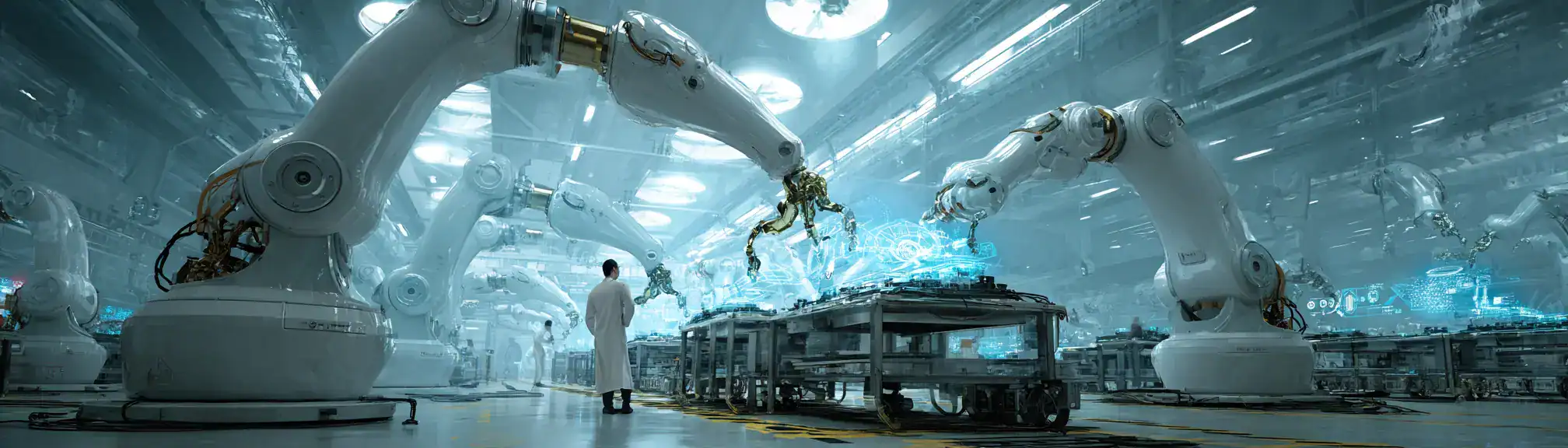

Recent advancements from DeepMind have showcased remarkable progress in AI-driven robotics. The company has developed AI agents that perform complex manipulation tasks as well as, or even better than, humans. This milestone brings practical applications of embodied AI within closer reach.

DeepMind is well known for its contributions to artificial intelligence, particularly algorithms that master games such as Go and chess. The latest achievement focuses on physical tasks: the AI agents are trained to grasp, sort, and assemble objects, demonstrating versatile problem-solving capabilities.

By fine-tuning its algorithms and incorporating advanced reinforcement learning techniques, DeepMind has made strides in enabling machines to interact more naturally with the physical world.

What sets this breakthrough apart is the combination of precision, adaptability, and generalisation exhibited by the AI agents. Unlike earlier robotic systems that required extensive pre-programming for specific tasks, these new agents can learn from experience and adapt to unfamiliar scenarios with minimal human input.

This opens the door to more autonomous robots that can operate in unstructured environments such as homes, warehouses, or disaster zones, where variability and unpredictability are the norm.

Moreover, this progress underscores the growing convergence between AI research and robotics hardware development. DeepMind’s work complements advancements in sensor technology, tactile feedback, and low-latency control systems, creating a more cohesive ecosystem for embodied AI.

As these systems evolve, they could play a transformative role in labour-intensive industries, helping to mitigate workforce shortages and improve operational safety. However, this also raises important questions about accountability, ethical deployment, and long-term socio-economic impact – topics that researchers and policymakers will need to address as these capabilities transition from labs to everyday use.

Key Data Points

- DeepMind has introduced Gemini Robotics, a family of advanced AI models for robots capable of performing complex manipulation tasks in real-world settings, matching or exceeding human proficiency.

- These models use vision-language-action (VLA) architectures, enabling robots to understand natural language commands and carry out tasks flexibly, even in unfamiliar or unstructured environments.

- Gemini Robotics can generalise skills and adapt to new tasks with as few as 50 to 100 demonstrations, outperforming previous models in benchmarks for generality, dexterity, and interactivity.

- Demonstrated capabilities include folding laundry, unzipping bags, industrial assembly, paper folding, grasping diverse objects, and responding to multi-step voice instructions.

- On-device deployment allows Gemini Robotics to run locally without requiring continuous network connectivity, reducing latency and enabling robust operation in challenging real-world scenarios.

- DeepMind’s algorithms are built on state-of-the-art reinforcement learning, vision-language modelling, and embodied reasoning, enabling robots to learn from human demonstrations and quickly adapt to new problems.

- Safety and ethical considerations are a major focus, with DeepMind releasing datasets and benchmarks (like ASIMOV) to assess robot action safety and partnering with leading robotics companies for responsible deployment.

- These AI-driven advancements open pathways to more autonomous robots in domains ranging from household help and logistics to disaster response and manufacturing, potentially addressing workforce shortages and enhancing operational safety.

- The ongoing convergence of AI research and robotics hardware, including partnerships with firms like Apptronik and Boston Dynamics, is rapidly accelerating the commercial viability of embodied AI systems.
