As businesses increasingly rely on artificial intelligence to streamline operations, there is growing concern about the privacy and security of sensitive data.

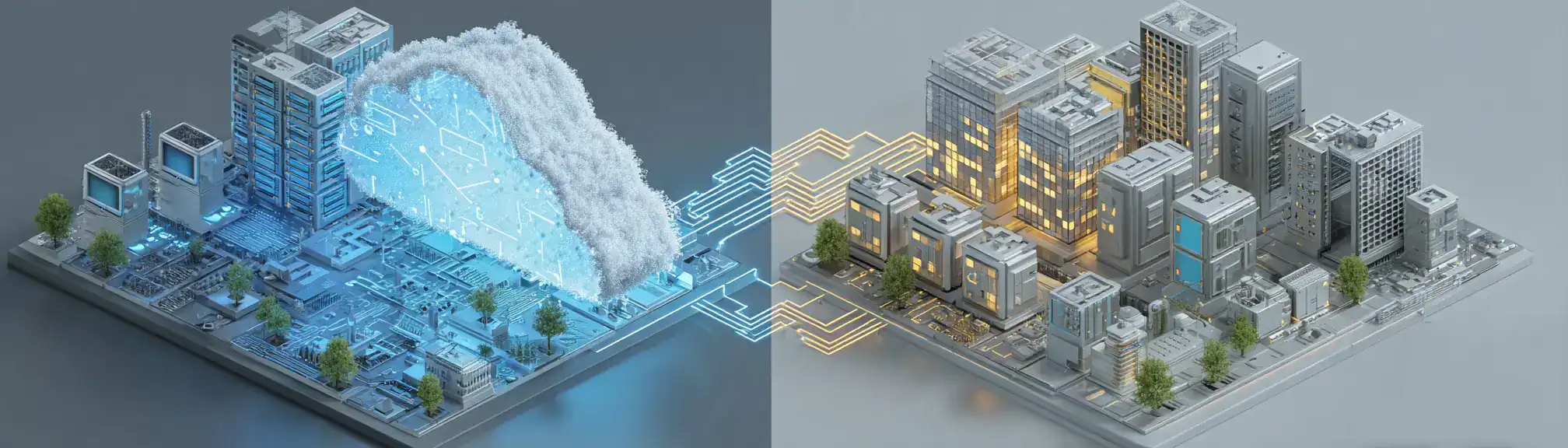

While cloud-based services like ChatGPT offer advanced capabilities, they also pose potential risks related to data breaches and compliance issues. A viable solution for many companies is to run AI models locally.

Deploying AI on-premise allows organisations to maintain tighter control over their data, ensuring that sensitive information remains within their IT infrastructure.

This strategy not only enhances privacy but also helps meet regulatory requirements more easily.

Advancements in open-source AI tools have made it possible for businesses to adopt robust AI solutions without relying heavily on external cloud services.

Tools such as TensorFlow and PyTorch, together with open-weight alternatives to models like GPT-3, are now more accessible, allowing teams to create customised AI applications that run on their own servers.

Cloud or On-Premise

The choice between cloud-based and local AI solutions ultimately depends on a company’s specific needs and constraints.

However, for those prioritising data security and privacy, locally hosted AI models present a compelling option.

In addition to security advantages, on-premise AI deployment can lead to performance improvements, particularly in environments where latency and uptime are critical.

By processing data locally, organisations can reduce reliance on external networks and internet connectivity, enabling faster response times and more consistent performance.

This is especially valuable in sectors such as healthcare, finance, and manufacturing, where even minor delays can impact outcomes or operational efficiency.

Cost of AI Models

Another consideration is cost. While setting up and maintaining local infrastructure can require significant upfront investment, over time, it may prove more economical for companies with consistent, high-volume AI workloads.
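A simple break-even estimate makes this trade-off concrete. The sketch below is illustrative only: the function name and every figure in it are hypothetical, and it assumes flat monthly costs, ignoring factors such as hardware depreciation, staffing changes, and usage growth.

```python
import math


def break_even_month(upfront_cost, local_monthly_cost, cloud_monthly_cost):
    """Estimate the month in which cumulative local cost drops to or below cloud cost.

    All inputs are hypothetical planning figures in the same currency.
    Returns None when local running costs are not lower than cloud costs,
    because the upfront investment is then never recovered.
    """
    monthly_saving = cloud_monthly_cost - local_monthly_cost
    if monthly_saving <= 0:
        return None
    # Round up: break-even is reached during this month, not before it.
    return math.ceil(upfront_cost / monthly_saving)


# Hypothetical example: 120,000 upfront, 3,000/month to run locally,
# versus 8,000/month in cloud usage fees.
months = break_even_month(120_000, 3_000, 8_000)
print(f"Break-even after {months} months")
```

With these made-up inputs the saving is 5,000 per month, so the upfront investment is recovered after 24 months. A workload with lower or more variable cloud spend would push that point further out, or never reach it.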

Retaining control over model updates and data processing pipelines allows organisations to tailor solutions to their evolving needs without being tied to a vendor’s roadmap or pricing structure.

As AI deployment continues to mature, the choice between cloud and local solutions is likely to become an increasingly important part of enterprise IT planning.

Key Benefits of Local AI Deployment

- Enhanced Data Security and Privacy

  - Local AI keeps sensitive data within your organisation, reducing exposure to third-party breaches (OneClick IT Solution, 2025).

  - In 2022, over 7.9 billion records were exposed in data breaches, many of them through vulnerabilities in cloud storage (OneClick IT Solution, 2025).

  - Local deployment helps meet strict regulatory requirements such as GDPR and HIPAA by ensuring data residency and full control (DockYard, 2025; SUSE, 2025).

- Reduced Data Breach Risk

  - Nearly a third of all cyberattacks occur through third-party vectors; local AI minimises this risk by avoiding external data sharing (Netguru, 2024).

- Performance and Latency

  - Local AI can deliver up to 50% faster search and retrieval times compared to cloud-based solutions (OneClick IT Solution, 2025).

  - On-premise deployment is ideal for latency-critical environments such as healthcare diagnostics, financial fraud detection, and manufacturing automation (StorageSwiss, 2025).

- Cost and Budget Control

  - While on-premise AI requires higher upfront investment for servers and infrastructure, it can be more cost-effective over time for organisations with stable, high-volume workloads (Lenovo, 2025; Verge.io, 2025).

  - Public cloud AI costs can escalate quickly with usage, while local AI provides predictable budgeting and eliminates ongoing subscription fees (Verge.io, 2025).

- Customisation and Flexibility

  - Local AI allows for advanced configuration and seamless integration with existing business systems (OneClick IT Solution, 2025; SUSE, 2025).

  - Open-source tools like TensorFlow, PyTorch, LocalAI, and deployment frameworks such as Ollama and BentoML enable robust, customisable AI solutions on local servers.
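To give a sense of what working with one of these tools looks like, here is a minimal sketch of querying a model served by Ollama on the same machine. It assumes Ollama is running on its default local port (11434) and that a model has already been pulled; the model name `llama3` and the helper function names are placeholders for illustration, not recommendations. The prompt never leaves the host, which is the point of the exercise.

```python
import json
import urllib.request

# Ollama's default local endpoint; adjust if your install uses another port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3"):
    """Build a POST request for a single, non-streaming completion.

    The model name is an assumption: use whichever model you have pulled.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, model="llama3", timeout=60):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With the server running, `ask_local_model("Summarise this contract clause: ...")` would return the model's reply as a string. Only the standard library is used here, so nothing beyond Ollama itself needs installing.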

Use Cases for Local AI

- Healthcare: Securely process medical records and imaging for diagnostics, ensuring privacy and regulatory compliance (StorageSwiss, 2025).

- Finance: Internal fraud detection and compliance with PCI DSS and GDPR.

- Manufacturing: Real-time quality control and predictive maintenance.

- Legal and HR: Confidential document review and employee data analytics.

Challenges and Considerations

- Upfront Costs: Significant capital investment in hardware, facilities, and skilled personnel (SUSE, 2025; Uvation, 2025).

- Maintenance: Requires in-house expertise for system updates, security, and optimisation.

- Scalability: Expanding capacity is slower and more expensive than in the cloud (Pluralsight, 2025).

References

- OneClick IT Solution: Why Install AI Model Locally, on Personal Cloud or On Premises (2025)

- SUSE: On-Premise AI – Control Your Data, Own Your Future (2025)

- Netguru: Lower Data Breaches and Security Risks with Local Language Models (2024)

- DockYard: The Business Case for Local AI (2025)

- StorageSwiss: Practical Use Cases for On-Premises AI (2025)

- Lenovo: On-Premise vs Cloud – Generative AI Total Cost of Ownership (2025)

- Verge.io: The ROI of On-Premises AI (2025)

- LocalAI: Open Source Local AI Platform

- Pluralsight: Cloud AI vs. On-Premises AI (2025)

- Uvation: Cost of AI Server – On-Prem, Data Centres, Hyperscalers (2025)

- n8n Blog: Open-Source AI Tools for Local Deployment (2025)